What is the difference between an ND-Buffer and a G-Buffer?

I'm a noob at WebGL. I've read about ND-Buffers and G-Buffers in several posts, as if choosing between them were a strategic decision for WebGL development.

How are ND-Buffers and G-Buffers related to rendering pipelines? Are ND-Buffers used only in forward rendering and G-Buffers only in deferred rendering?

A JavaScript code example showing how to implement both would help me understand the difference.

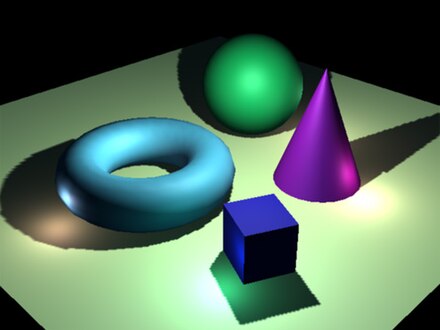

G-Buffers are just a set of buffers generally used in deferred rendering.

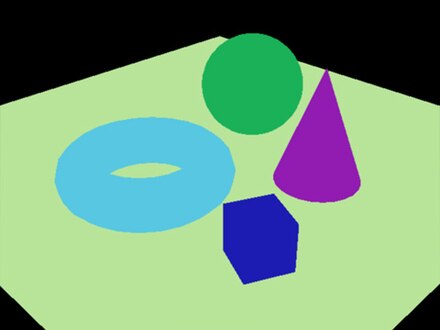

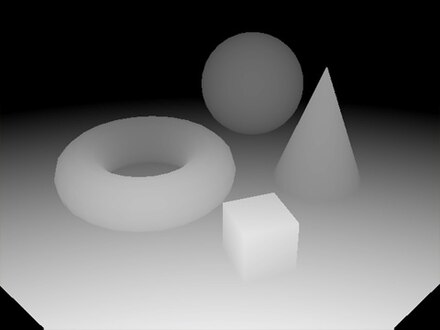

Wikipedia gives a good example of the kind of data often found in a g-buffer:

- Diffuse color info
- World space or screen space normals
- Depth buffer / Z-Buffer

The combination of those 3 buffers is referred to as a "g-buffer".

After generating those 3 buffers from geometry and material data, you can run a shader that combines them to generate the final image.
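To make the "combine" step concrete, here is a minimal CPU-side sketch of what a deferred lighting shader does for each pixel. It assumes a hypothetical layout: three flat `Float32Array`s (`diffuse` as RGB, `normal` as unit XYZ vectors, `depth` as one value per pixel where `1.0` means "nothing was drawn here") and a single directional light with simple Lambertian shading. The helper names are made up for illustration; in a real WebGL renderer this loop would be a fragment shader sampling the g-buffer textures.

```javascript
// Hypothetical g-buffer for a width x height image, stored as flat arrays.
function createGBuffer(width, height) {
  const n = width * height;
  return {
    width,
    height,
    diffuse: new Float32Array(n * 3),      // RGB albedo per pixel
    normal: new Float32Array(n * 3),       // unit normal (x, y, z) per pixel
    depth: new Float32Array(n).fill(1.0),  // 1.0 = cleared / background
  };
}

// Lighting ("combine") pass: reads only the g-buffer, never the original geometry.
// lightDir is assumed to be a unit vector pointing from the surface toward the light.
function shadeGBuffer(gbuf, lightDir, lightColor) {
  const n = gbuf.width * gbuf.height;
  const out = new Float32Array(n * 3); // final image, starts black
  for (let i = 0; i < n; i++) {
    if (gbuf.depth[i] >= 1.0) continue; // background pixel: leave black
    const nx = gbuf.normal[i * 3];
    const ny = gbuf.normal[i * 3 + 1];
    const nz = gbuf.normal[i * 3 + 2];
    // Lambertian term: max(N . L, 0)
    const lambert = Math.max(nx * lightDir[0] + ny * lightDir[1] + nz * lightDir[2], 0);
    for (let c = 0; c < 3; c++) {
      out[i * 3 + c] = gbuf.diffuse[i * 3 + c] * lightColor[c] * lambert;
    }
  }
  return out;
}
```

Depth is only used here to skip background pixels; in practice it is also used to reconstruct each pixel's position so point and spot lights can be evaluated.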

What actually goes into a g-buffer is up to the particular engine/renderer. For example, one of Unity3D's deferred renderers contains diffuse color, occlusion, specular color, roughness, normal, depth, stencil, emission, lighting, lightmap, and reflection probes.

An ND buffer is just a "normal depth buffer", which makes it a subset of what's usually found in a typical g-buffer.
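Because an ND buffer only needs normals and depth, both fit in a single RGBA texel. The sketch below shows one possible packing, assumed for illustration: the normal's components are remapped from [-1, 1] to [0, 1] into RGB, and a linear depth normalized to [0, 1] goes into alpha. The function names and the `near`/`far` parameters are hypothetical.

```javascript
// Pack a unit normal and a view-space distance into one RGBA texel (values in [0, 1]).
// near/far are hypothetical camera clip distances used to normalize depth linearly.
function packNormalDepth(normal, viewDepth, near, far) {
  const d = Math.min(Math.max((viewDepth - near) / (far - near), 0), 1); // clamp to [0, 1]
  return [
    normal[0] * 0.5 + 0.5, // [-1, 1] -> [0, 1]
    normal[1] * 0.5 + 0.5,
    normal[2] * 0.5 + 0.5,
    d,
  ];
}

// Inverse of packNormalDepth: recover the normal and the view-space distance.
function unpackNormalDepth(texel, near, far) {
  return {
    normal: [texel[0] * 2 - 1, texel[1] * 2 - 1, texel[2] * 2 - 1],
    viewDepth: near + texel[3] * (far - near),
  };
}
```

If the ND buffer is an 8-bit RGBA texture, depth in a single alpha channel gets only 256 levels, so renderers often use float textures or spread depth across more than one channel.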

As for a sample, a complete one is arguably too big for SO, but there's an article about deferred rendering in WebGL on MDN.
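On the WebGL side, the main mechanical difference is how many render targets you attach to the framebuffer. The following is a minimal sketch assuming a WebGL2 context (`canvas.getContext("webgl2")`); `createRenderTargets` is a hypothetical helper, not part of any library, and the formats chosen (8-bit RGBA color, 24-bit depth) are just one option. Depending on `colorCount`, it creates either a small g-buffer (diffuse + normals + depth) or an ND buffer (normals + depth).

```javascript
// Creates a framebuffer with `colorCount` RGBA8 color textures plus a depth texture.
// colorCount = 2 -> tiny g-buffer (attachment 0: diffuse, attachment 1: normals)
// colorCount = 1 -> ND buffer (attachment 0: normals; depth lives in the depth texture)
// Requires WebGL2: texStorage2D and drawBuffers are WebGL2 APIs.
function createRenderTargets(gl, width, height, colorCount) {
  const fb = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, fb);

  const makeTexture = (internalFormat) => {
    const tex = gl.createTexture();
    gl.bindTexture(gl.TEXTURE_2D, tex);
    gl.texStorage2D(gl.TEXTURE_2D, 1, internalFormat, width, height);
    // NEAREST: the lighting pass should read exact per-pixel values
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
    return tex;
  };

  const colorTextures = [];
  const attachments = [];
  for (let i = 0; i < colorCount; i++) {
    const tex = makeTexture(gl.RGBA8);
    const attachment = gl.COLOR_ATTACHMENT0 + i; // COLOR_ATTACHMENTn are consecutive
    gl.framebufferTexture2D(gl.FRAMEBUFFER, attachment, gl.TEXTURE_2D, tex, 0);
    colorTextures.push(tex);
    attachments.push(attachment);
  }
  // Map fragment shader outputs to the attachments (multiple render targets).
  gl.drawBuffers(attachments);

  const depthTexture = makeTexture(gl.DEPTH_COMPONENT24);
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.TEXTURE_2D, depthTexture, 0);

  const status = gl.checkFramebufferStatus(gl.FRAMEBUFFER);
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  if (status !== gl.FRAMEBUFFER_COMPLETE) {
    throw new Error("Framebuffer incomplete: 0x" + status.toString(16));
  }
  return { framebuffer: fb, colorTextures, depthTexture };
}
```

With `colorCount = 2`, the geometry pass's GLSL ES 3.00 fragment shader would declare two outputs (`layout(location = 0) out vec4 ...;` and `layout(location = 1) out vec4 ...;`), and a later full-screen pass samples the textures to compute lighting. Normals stored in RGBA8 need to be remapped to [0, 1] first.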
