How to find the parameters that globally maximize the expected value of a function of a random variable (using Python mystic)

I'm new to asking questions on StackOverflow, so sorry if I'm using it the wrong way.

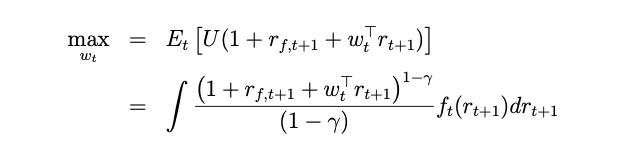

I have a maximization problem from a paper that looks like this:

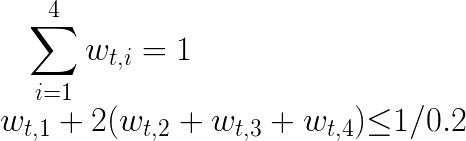

and some constraints:

where r_{f,t+1} and γ are given constants, r_{t+1} is a multidimensional random vector (4-dimensional here), and f_t(r_{t+1}) is the multivariate distribution function of r_{t+1}. The problem is to find the weight vector w_t that maximizes the expected value.
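Reading the objective off the utility that `expect_value` computes in the code below (an assumption, since the paper's formula only appears as an image), the problem can be written as:

```latex
\max_{w_t}\; \mathbb{E}_t\!\left[\frac{\left(1 + r_{f,t+1} + w_t^{\top} r_{t+1}\right)^{1-\gamma}}{1-\gamma}\right]
  = \max_{w_t} \int \frac{\left(1 + r_{f,t+1} + w_t^{\top} r_{t+1}\right)^{1-\gamma}}{1-\gamma}\, f_t(r_{t+1})\,\mathrm{d}r_{t+1}
```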

The paper describes how it solves the problem: "The integrals are solved by simulating 100,000 variates for the 4 factors (the r_{t+1} vector) from the multivariate conditional return distribution f_t(r_{t+1})". I know how to generate random numbers and compute the mean of a distribution, but I don't know exactly how to use them in an optimization problem.
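The simulation step in that quote amounts to replacing the integral with a sample mean over fixed draws. Writing u(w_t, r) for the per-draw utility (what `utility_array` holds in the code below), a sketch of the approximation is:

```latex
\int u(w_t, r_{t+1})\, f_t(r_{t+1})\,\mathrm{d}r_{t+1}
  \;\approx\; \hat{U}_N(w_t) = \frac{1}{N}\sum_{n=1}^{N} u\!\left(w_t, r^{(n)}\right),
  \qquad r^{(1)}, \dots, r^{(N)} \overset{\text{iid}}{\sim} f_t, \quad N = 100{,}000
```

Because the N draws are generated once and reused for every candidate w_t, \hat{U}_N is an ordinary deterministic function of w_t that any optimizer can maximize; this is usually called sample average approximation. Its Monte Carlo standard error is the standard deviation of u across the draws divided by √N.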

I tried generating random draws and returning the mean utility over those draws as the objective function, as in the example below:

```python
import numpy as np

# Simulated factor returns: 1000 draws from a 4-dimensional standard normal
rands = np.random.multivariate_normal(mean=[0, 0, 0, 0],
                                      cov=[[1, 0, 0, 0], [0, 1, 0, 0],
                                           [0, 0, 1, 0], [0, 0, 0, 1]],
                                      size=1000)

def expect_value(weight, rf, gamma, fac_ndarr):
    """Negated sample-mean power utility (negated so a minimizer maximizes it)."""
    weight = np.asarray(weight)
    utility_array = (1 + rf + fac_ndarr.dot(weight))**(1 - gamma) / (1 - gamma)
    expected_value = utility_array.mean()
    return -expected_value
```
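To see how much of the run-to-run variation can come from the simulation itself, it helps to report the Monte Carlo standard error next to the mean. This is a sketch under stated assumptions: `expected_utility_with_se` is a hypothetical helper, and the factors are made-up (uncorrelated, 10% volatility) rather than the identity covariance above. With unit-variance factors, 1 + rf + w·r can land near zero, where the γ = 7 power utility explodes, which makes the sample mean extremely noisy.

```python
import numpy as np


def expected_utility_with_se(weight, rf, gamma, draws):
    """Hypothetical helper: Monte Carlo estimate of E[u] and its standard error."""
    weight = np.asarray(weight, dtype=float)
    gross = 1 + rf + draws @ weight               # gross portfolio return per draw
    utility = gross ** (1 - gamma) / (1 - gamma)  # power (CRRA) utility per draw
    mean = utility.mean()
    se = utility.std(ddof=1) / np.sqrt(len(utility))  # standard error of the mean
    return mean, se


# Hypothetical inputs: 4 uncorrelated factors with 10% volatility.
rng = np.random.default_rng(0)
cov = 0.01 * np.eye(4)
w = np.full(4, 0.25)

draws = rng.multivariate_normal(np.zeros(4), cov, size=50_000)
m1, se1 = expected_utility_with_se(w, 0.15, 7, draws)
m2, _ = expected_utility_with_se(w, 0.15, 7, draws)   # same draws: identical value
fresh = rng.multivariate_normal(np.zeros(4), cov, size=50_000)
m3, _ = expected_utility_with_se(w, 0.15, 7, fresh)   # new draws: differs on the order of se1

print(m1 == m2, abs(m1 - m3), se1)
```

Holding the draws fixed makes the objective reproducible; generating new draws inside the cost function would make every evaluation noisy, which a population-based optimizer cannot distinguish from a real change in w.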

Next, I use the mystic module to search for the global minimum of the negated expected value:

```python
from mystic.solvers import diffev2
from mystic.symbolic import (generate_conditions, generate_penalty,
                             generate_constraint, generate_solvers, simplify)

equation = """
x0 + x1 + x2 + x3 == 1
x0 + 2*(x1 + x2 + x3) - (1. / 0.2) <= 0
"""

pf = generate_penalty(generate_conditions(equation), k=1e20)
cf = generate_constraint(generate_solvers(simplify(equation)))

bounds = [(0, 1)] * 4

# Here the given constants `rf` and `gamma` are 0.15 and 7;
# they are passed via `args` together with `rands`,
# and `args` is forwarded to the `cost` function.
result = diffev2(cost=expect_value,
                 args=(0.15, 7, rands),
                 x0=bounds,
                 bounds=bounds,
                 constraints=cf,  # diffev2 reads the `constraints` (plural) keyword
                 penalty=pf,
                 full_output=True,
                 npop=40)
```
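One way to sanity-check the mystic result (an alternative I'm adding, not the paper's method): substituting x0 = 1 - (x1 + x2 + x3) from the equality into the inequality gives x1 + x2 + x3 <= 4, which always holds when every weight lies in [0, 1]. So the feasible set is just the simplex (nonnegative weights summing to 1), and a brute-force grid over it, with the draws held fixed, gives a reproducible approximate optimum. `simplex_grid`, `grid_search`, the step size, and the 10%-volatility factors are all hypothetical choices for illustration.

```python
import itertools

import numpy as np


def simplex_grid(n_assets, steps):
    """Yield every weight vector with entries k/steps (k >= 0) that sums to 1."""
    for ks in itertools.product(range(steps + 1), repeat=n_assets - 1):
        last = steps - sum(ks)
        if last >= 0:  # keep only combinations that leave a nonnegative last weight
            yield np.array(ks + (last,), dtype=float) / steps


def grid_search(rf, gamma, draws, steps=20):
    """Brute-force maximizer of the sample-mean power utility over the simplex grid."""
    best_w, best_val = None, -np.inf
    for w in simplex_grid(draws.shape[1], steps):
        gross = 1 + rf + draws @ w  # gross portfolio return per draw
        val = np.mean(gross ** (1 - gamma) / (1 - gamma))
        if val > best_val:
            best_w, best_val = w, val
    return best_w, best_val


# Hypothetical inputs: 4 uncorrelated factors with 10% volatility, draws held fixed.
rng = np.random.default_rng(1)
draws = rng.multivariate_normal(np.zeros(4), 0.01 * np.eye(4), size=20_000)
w_best, u_best = grid_search(0.15, 7, draws, steps=20)
print(w_best, u_best)
```

With a step of 0.05 this evaluates 1,771 candidate weight vectors. The grid only locates the optimum to within the step size, but for a given set of draws it returns the same answer on every run, which makes it a useful reference point for the diffev2 output.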

In my real problem I generate 50,000 random draws, and the solver doesn't seem to find the exact global minimum: every run returns a different solution and a different objective value. Even after reading the documentation, increasing `npop` to 1000 and setting `scale` (the multiplier for mutations on the trial solution) to 0.1, it still didn't work as expected.
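A way to judge whether two runs really disagree (a sketch I'm adding, not something from mystic or the paper): evaluate both returned weight vectors on the same fixed draws and look at the per-draw utility difference. If the mean difference is within about two standard errors of zero, the two "solutions" are statistically indistinguishable at that sample size. `paired_difference`, the two weight vectors, and the factor distribution below are hypothetical.

```python
import numpy as np


def paired_difference(w_a, w_b, rf, gamma, draws):
    """Mean and standard error of u(w_a) - u(w_b), evaluated on the same draws."""
    def utility(w):
        gross = 1 + rf + draws @ np.asarray(w, dtype=float)
        return gross ** (1 - gamma) / (1 - gamma)

    diff = utility(w_a) - utility(w_b)  # paired: same draw for both weights
    return diff.mean(), diff.std(ddof=1) / np.sqrt(len(diff))


# Hypothetical inputs: 4 uncorrelated factors with 10% volatility.
rng = np.random.default_rng(2)
draws = rng.multivariate_normal(np.zeros(4), 0.01 * np.eye(4), size=50_000)

# Two hypothetical "solutions" returned by different solver runs.
w_run1 = [0.25, 0.25, 0.25, 0.25]
w_run2 = [0.26, 0.24, 0.25, 0.25]
mean_diff, se_diff = paired_difference(w_run1, w_run2, 0.15, 7, draws)
print(mean_diff, se_diff, abs(mean_diff) < 2 * se_diff)
```

Using the same draws for both candidates (common random numbers) cancels much of the sampling noise, so the difference is estimated far more precisely than two independent means would be.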

Is my approach to the optimization problem wrong? What is the correct way to use simulated data when maximizing an expected value? Am I using the mystic module incorrectly? Or is there another efficient way to solve a maximization problem like the one above?

Thanks very much for your help!

mystic is built for this exact type of problem, as it can optimize in product measure space. See: https://github.com/uqfoundation/mystic/blob/master/examples5/TEST_OUQ_surrogate_diam.py
