Comparing two algorithms that use differently the same functions

I have a set o rules and all the rules extract the same type of instances (names of cities for example) from a long text. I'm comparing the following two algorithms:

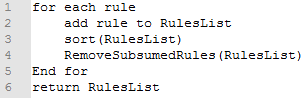

Algorithm1:

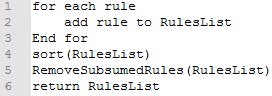

Algorithm2:

knowing that:

- each rule has the property "TP" which is the number of "correct extracted instances".

- The function sort(): sorts RulesList in decreasing order according to TP values.

- The function RemoveSubsumedRules() removes a rule R2 from RulesList if its "correct extracted instances" set is included the the one of a rule R1 (in RulesList).

is Algorithm1 the same as Algorithm2? they return the same RulesList (sames rules) in the end?

Or does Algorithm1 remove more rules than Algorithm2? (because RemoveSubsumedRules() is called in every iteration).

Thanks.

Both algorithms do the same thing (in terms of result produced).

To see this, we can prove that in the end, RuleList constsists of exactly those rules which are not subsumed by any other rule. In case of Algorithm 2 this is clear, so let us prove this for Algorithm 1.

First, it is easy to see that those rules are indeed in the final RuleList, as they cannot be deleted by RemoveSubsumedRules.

Secondly, suppose that there is some rule R1 in the final RuleList which is subsumed by some other rule R2 (not necessarily in the RuleList). Then there exists some rule R3 different than R1 which subsumes R1 and is not subsumed by any other rule (note that R3 might be equal to R2). We already know that R3 is in the final RuleList, because no other rule subsumes it. But in this case, when the last call to RemoveSubsumedRules was made, both R1 and R3 were in the RuleList, so R1 should have been removed, which we supposed wasn't the case. We have reached a contradiction, so no rule such as R1 can exist in the final RuleSet.

- Storing a list of one million prime numbers in 33,334 bytes

- Ordering points that roughly follow the contour of a line to form a polygon

- How to construct a binary tree from a values list?

- Algorithm for Determining Tic Tac Toe Game Over

- Finger Counting Problem to find the name of the finger based on the given number

- TicTacToe win checking logic

- How do you detect Credit card type based on number?

- Ukkonen's suffix tree algorithm in plain English

- How to detect cycles in a directed graph using the iterative version of DFS?

- Previous power of 2

- Creating a random tree?

- LeetCode #849: Maximize Distance to Closest Person

- Peak signal detection in realtime timeseries data

- Given an array of numbers including positive and negative, how to find the shortest subarray that has a sum >= target using prefixSum and bisect?

- Approaches To Fast Execution Of Runtime Chain Of Functions?

- How to be sure I am implementing/simulating a paper/algorithm correctly?

- From a list of groups of words, find if any of them appears in a string and return which word group and which index in the string?

- Sort an array as [1st highest number, 1st lowest number, 2nd highest number, 2nd lowest number...]

- Sorting algorithms for data of known statistical distribution?

- Explanation of Merge Sort for Dummies

- How to create an algorithm to find all combinations for a given formula (add 2 or substract 3)

- Regex Pattern Starting from X Pattern until X Pattern

- How to find the longest substring with equal amount of characters efficiently

- Reasons for using a Bag in Java

- Design an efficient algorithm to sort 5 distinct keys in fewer than 8 comparisons

- Fast & accurate atan/arctan approximation algorithm

- Time Complexity Of A Recursive Function

- How to change Math.atan2's zero axis to any given angle, while keeping it in the range of [-π, π]?

- Search for closest-distance multi-point array in array of multi-point arrays

- Leetcode Python 208 -- memory not clearing from previous test case?